GAN-Engine¶

| Runtime | Docs / License | Tests |

|---|---|---|

|

|

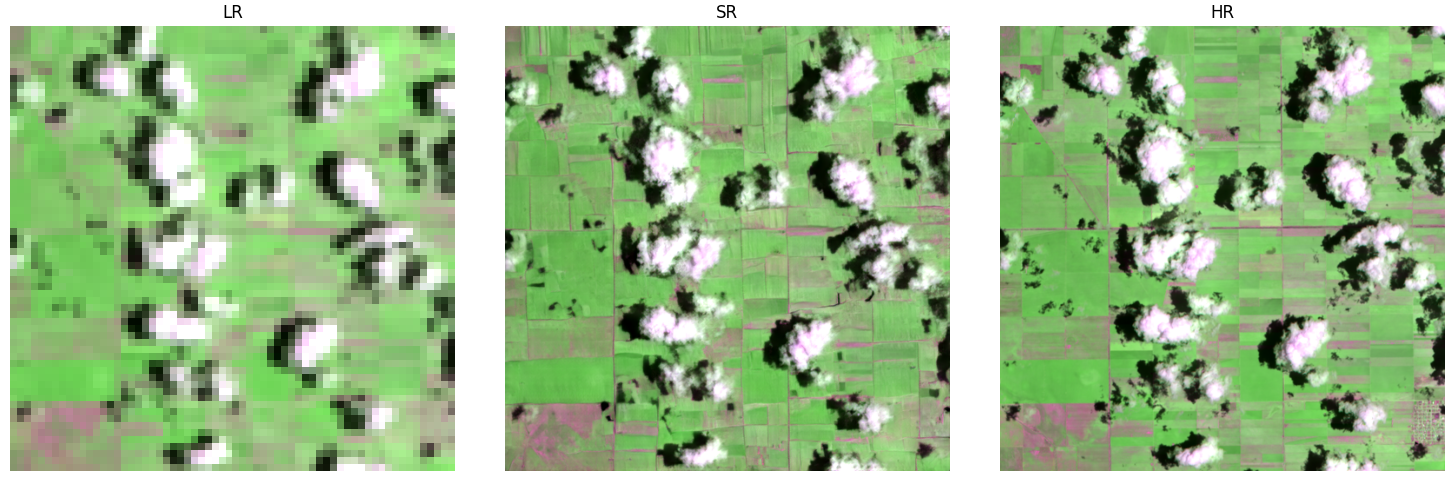

GAN-Engine is a general-purpose adversarial research environment. It began with super-resolution and is expanding into inpainting, conditional and unconditional image generation, and text-guided synthesis. The architecture is intentionally modular: if your data can be described as channels (plus optional masks or prompts), GAN-Engine lets you normalise it, condition on it, and model it without rewriting the training loop.

What makes it different?¶

- Task agility. Switch from super-resolution to inpainting or prompt-driven generation by choosing a different YAML preset.

- Composable architectures. Swap generator or discriminator families, layer in attention, register new modules, or wire up diffusion-ready adapters without touching trainer code.

- Rich conditioning. Mix class labels, segmentation masks, temporal context, or text embeddings to guide generation.

- Perceptual losses that understand your bands. Assign which channels feed into VGG, LPIPS, CLIP, or custom extractors so hyperspectral, medical, or grayscale data still benefit from perceptual guidance.

- Robust training loop. Warm-up phases, adversarial ramps, mixed precision, gradient accumulation, EMA, and Lightning 1.x/2.x compatibility are baked in.

- Experiment telemetry. Automated logging, validation panels, and checkpoint summaries keep researchers and stakeholders in sync.

These docs track the roadmap toward multi-task generative modelling. Expect super-resolution instructions alongside previews of inpainting, conditional, and text-to-image tooling. Each release adds templates and references for the new capabilities.

Choose your adventure¶

| Goal | Where to start |

|---|---|

| Install the toolkit | Getting started |

| Understand the module layout | Architecture |

| Configure experiments | Configuration |

| Prepare datasets | Data |

| Launch training | Training + Training guideline |

| Run inference or sampling | Inference |

| Inspect Lightning internals | Trainer details |

| Explore benchmarks & samples | Results |

Typical workflow¶

- Duplicate a template config. Everything starts with YAML: pick a task, define the dataset loader, normalisation, conditioning signals, and architecture.

- Point to your data. Use the built-in dataset selectors or register your own loader for DICOM, NIfTI, Zarr, tiling pipelines, COCO annotations, or prompt datasets.

- Train with confidence. Kick off

python -m gan_engine.train --config <config.yaml>and monitor metrics in Weights & Biases or TensorBoard. - Validate and export. Swap in EMA weights, export to ONNX/TorchScript, tile huge scenes, or sample from unconditional models without leaving the CLI.

Ecosystem¶

GAN-Engine is designed as the backbone of a broader generative stack. Companion repositories will host task-specific assets (prompt datasets, mask generators, diffusion adapters) while this core focuses on reusable infrastructure. Contributions that expand the ecosystem are welcome!